Drone Mapping and Photogrammetry

I have been flying drones since 2014. In 2020, I became a certified commercial drone pilot pursuant to the FAA Small UAS (Part 107) requirements and have maintained my license to the present. I have used drone technology in archaeological projects for aerial photography, GPS-based autonomous modeling, and landscape depth map generation. At Huqoq, I used GPS-based drone photogrammetry to generate 3D models to track the evolution of the excavation landscape in ArcGIS.

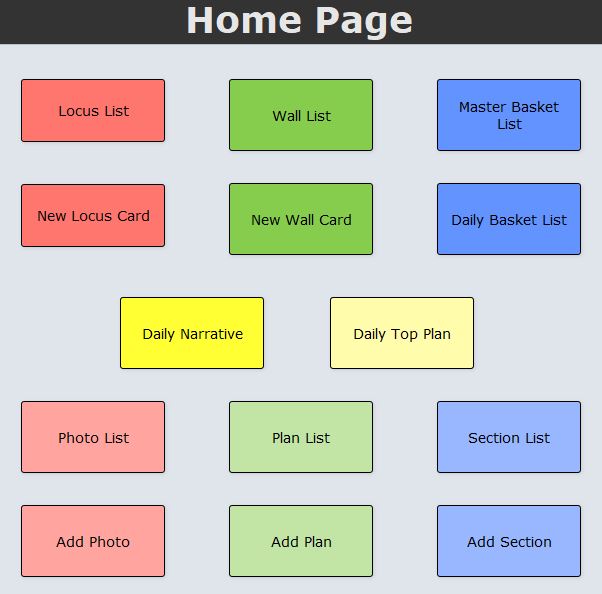

Archaeological Databases

In 2016, using FileMaker Pro and JavaScript, I built a relational database for the Huqoq Excavation Project to organize all data gathered in the field and in the lab. Before building the database, I met with a range of users to gather requirements and review initial designs. After approval, I built the database along with a remote, cloud-based hosting solution. I tested the database in the field using satellite internet and cellular hotspots, and after comparing upload and download speeds, we opted to use hotspots as our remote connection solution. I have actively maintained the database since building it, providing updates with new informational hierarchies, accessibility-enabled layouts, and additional tagging functionality. I used this database as a launching point to build a similar system for the ‘Ayn Gharandal Archaeological Project in Jordan. The next iteration of the database is underway. It is being built as an open-source web application using the MERN stack (MongoDB, Express.js, React.js, and Node.js) to provide a fully online, modular database that can be adapted to specific excavation needs.

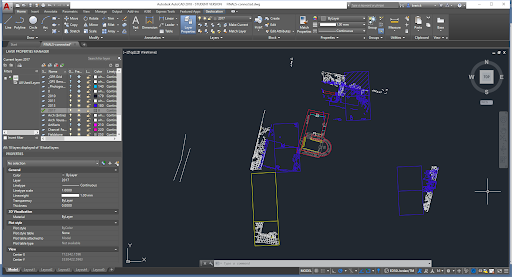

GPS Architectural Plotting in AutoCAD

Shooting points with a Topcon GPS unit, I plotted the architectural features of the third-century CE remains of the Roman military fort of ‘Ayn Gharandal in southern Jordan. In addition to using these points to generate architectural maps for the excavation report, I used the data to create a 3D visualization of the fort for use in virtual reality simulations. I created the 3D models in Blender and the VR experience in Unity. The AutoCAD drawing, 3D model, and VR experience were used extensively for undergraduate, graduate, and public education.

Museum Objects and Virtualized Space

I created 3D visualizations of classical and ancient Near Eastern artifacts from the NC Museum of Art, the Ackland Art Museum, and the UNC Special Collections Library. I used them to lead students and the general public through an exercise in examining material culture and deciding how to present artifacts in virtual environments navigable with the Meta Quest. I also created multiple 3D prints of artifacts to help convey the physicality of material culture in teaching.

Landscape Archaeology and Ritual Baths

Partnering with the University of Helsinki, I created accurately sized 3D visualizations of ritual baths from the ancient village of Gamla and the ancient city of Sepphoris. Combining volumetric measurements and X-ray fluorescence (XRF) readings taken on each bath, we determined likely fill levels. We then compared the volumetric and fill-level data to historical rainfall estimates, along with river and drainage systems, to understand the changing ecological landscape and what effect those changes may have had on the people living in the regions.

Monumental Building Illumination Project

Considering the question of how illumination from internal light sources may have affected a community’s use and experience of monumental classical buildings, I created visualizations of a series of oil lamps and polycandela from archaeological reports. After deciding on a set of oil lamps and a polycandelon to use, I created PLA and resin facsimiles of the lamps with 3D printers. I then partnered with a scientific glass blower who used the prints to create six glass versions of the oil lamps. Each lamp was filled with oil and fitted with a flax wick. For the first series of tests, I used olive oil, and for the second series, I used flaxseed oil. Using a lux meter, I took illuminance readings of the fully lit polycandelon in a perfectly dark room. I then used the resulting data to create internal lighting conditions in a virtual reality simulation. As part of the simulation, and simply as an exploratory study, I imported texts and invited students and researchers to try to read them under different lighting conditions using the HTC Vive and Meta Quest VR headsets.

VR Lecture Series

During the period of remote learning between 2020 and 2022 due to COVID, I gave a series of immersive lectures exploring ancient monumental buildings and archaeoastronomical material culture. I made the models using photogrammetry, cleaned them in Blender, and re-skinned them in MeshLab. I imported the models into Mozilla Hubs and generated a “multiplayer” room that students could join on their computers, with VR equipment, or through remote sharing. I began each lecture by inviting students to explore the space they embodied and write down any questions they had. We then discussed their questions, which most often centered on elements they noticed while exploring the space and the technology they were using.

Nepal Cultural Heritage Visualization Partnership

In 2019, I traveled to Kathmandu, Nepal, for two weeks, where I led a weeklong series of workshops in partnership with local communities aimed at the preservation and documentation of material culture. Following the workshops, we traveled the Kathmandu Valley, partnering with monasteries and cultural heritage sites to produce 3D visualizations using photogrammetry. The models that we made were shared widely, and I incorporated them into a learning simulation, first using Unity and then Unreal Engine.